For decades, the trajectory of computing power was relatively straightforward: pack more transistors onto a silicon die, increase the clock speed of the Central Processing Unit (CPU), and let Moore’s Law handle the rest. When graphical and parallel processing demands surged, the Graphics Processing Unit (GPU) stepped up, eventually becoming the undisputed workhorse of the early artificial intelligence boom.

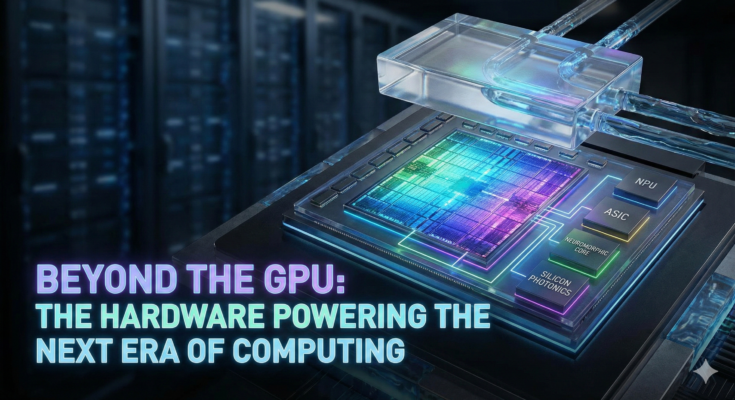

However, we are now entering a new paradigm. The massive processing demands of modern software, generative AI, and deep learning have pushed traditional CPU and GPU architectures to their physical and thermal limits. In response, US hardware engineering is undergoing a radical transformation. From specialized consumer silicon to brain-inspired enterprise data centers, the industry is moving aggressively beyond the GPU.

Here is a deep dive into the next-generation computing hardware that is engineering the future of technology.

The Bottleneck: Why CPUs and GPUs Are No Longer Enough

To understand where hardware is going, we must understand the limitations of where it is today.

- The CPU: Designed for sequential processing, CPUs are the ultimate generalists. They handle a few complex operations incredibly fast (scalar arithmetic), but they choke when asked to perform millions of simultaneous calculations.

- The GPU: GPUs solved this by utilizing thousands of smaller cores to perform parallel processing (vector and matrix arithmetic). This made them perfect for rendering graphics and training early deep learning models.
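To make the scalar-versus-parallel distinction concrete, here is a minimal sketch in plain Python (no GPU involved). The function name `matmul_with_count` is hypothetical and exists only for illustration. It multiplies two small matrices the way a single scalar core would, one multiply-accumulate (MAC) at a time, and counts the steps.

```python
def matmul_with_count(a, b):
    """Multiply matrices a (n x k) and b (k x m) one scalar step at a time.

    Returns the product and the number of multiply-accumulate (MAC) steps.
    """
    n, k, m = len(a), len(b), len(b[0])
    out = [[0] * m for _ in range(n)]
    macs = 0
    for i in range(n):          # each (i, j) output cell is independent,
        for j in range(m):      # so parallel hardware can compute them at once
            for p in range(k):
                out[i][j] += a[i][p] * b[p][j]
                macs += 1
    return out, macs


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
product, macs = matmul_with_count(a, b)
print(product)  # [[19, 22], [43, 50]]
print(macs)     # 8
```

The step count grows as n × k × m, so a sequential core falls behind quickly as matrices grow. Because every output cell depends only on one row of `a` and one column of `b`, thousands of small cores can each take a cell and work simultaneously.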

However, as AI models scale to trillions of parameters, GPUs present a massive thermal and energy problem. They consume exorbitant amounts of power and require immense cooling infrastructure, increasingly liquid cooling, just to keep the silicon from overheating. Furthermore, GPUs are still general-purpose accelerators; they carry architectural “baggage” that isn’t strictly necessary for executing specific AI inference tasks. To achieve sustainable scaling, silicon must become highly specialized.

Enter the NPU: The Revolution in Consumer PC Builds

One of the most immediate shifts in consumer hardware is the integration of the Neural Processing Unit (NPU). While cloud servers handle the heavy lifting of training massive AI models, laptops and desktops are increasingly required to handle “on-device AI” or local inference.

Unlike traditional processors, NPUs are purpose-built to execute the repetitive mathematical matrix operations essential for machine learning, doing so with a fraction of the power consumption of a GPU. Both Intel (with Intel AI Boost in Core Ultra chips) and AMD (with Ryzen AI using XDNA architecture) are now baking NPUs directly into their processors.

For PC hardware enthusiasts putting together a meticulously planned rig (the kind of system you might consider “the chosen one” that will last for years), NPUs are becoming a non-negotiable component. By offloading background AI tasks like real-time video upscaling, smart background blurring, or natural language processing, the NPU frees up the primary GPU and CPU. This is a massive advantage for complex setups.

For instance, if you are driving a demanding dual-monitor configuration, such as running a heavy application or game on a high-refresh-rate 1440p main display while managing streams or videos on a secondary 1080p screen, the GPU can dedicate its full thermal and compute budget to rendering high-fidelity graphics, while the NPU quietly and efficiently handles the AI workloads in the background without causing frame drops or thermal throttling.

Chiplet Architecture: Shattering the Monolithic Die

For years, processors were manufactured as a single, monolithic piece of silicon. But as chips grew larger to accommodate more cores and cache, manufacturing defects became costlier, and yields dropped. US hardware engineers are solving this through chiplet architecture.

Instead of building one massive chip, companies are designing smaller, modular “chiplets” that are manufactured independently and then stitched together onto a single substrate using advanced packaging techniques (like Intel’s Foveros 3D stacking).

- Higher Yields: A smaller die is less likely to contain a manufacturing defect, so a larger share of each wafer ends up as usable silicon, making manufacturing vastly more efficient.

- Heterogeneous Computing: Chiplets allow engineers to mix and match process nodes. You can have a cutting-edge 3nm CPU chiplet sitting right next to an older, cheaper 7nm I/O (Input/Output) chiplet and an NPU chiplet, all communicating at lightning speed within the same package.
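The yield argument can be sketched with the textbook Poisson yield model, Y = exp(-D0 × A), where D0 is the defect density and A is the die area. Everything numeric below is an assumption chosen for illustration: the defect density of 0.1 per cm², the 600 mm² monolithic die, and the four 150 mm² chiplets are not figures from any real product. The helper names are hypothetical, and the sketch ignores packaging cost and assembly yield.

```python
import math


def poisson_yield(area_mm2, defects_per_cm2):
    """Fraction of dies expected to be defect-free under a Poisson model."""
    area_cm2 = area_mm2 / 100.0
    return math.exp(-defects_per_cm2 * area_cm2)


def silicon_per_good_product(die_area_mm2, dies_per_product, defects_per_cm2):
    """Average wafer area (mm^2) spent to obtain one fully working product,
    assuming bad dies are tested and discarded before packaging."""
    y = poisson_yield(die_area_mm2, defects_per_cm2)
    return dies_per_product * die_area_mm2 / y


D0 = 0.1  # assumed defect density, defects per cm^2 (illustrative only)

mono_yield = poisson_yield(600, D0)
chiplet_yield = poisson_yield(150, D0)
mono_cost = silicon_per_good_product(600, 1, D0)
chiplet_cost = silicon_per_good_product(150, 4, D0)

print(f"monolithic 600 mm^2 yield: {mono_yield:.1%}")
print(f"chiplet 150 mm^2 yield:    {chiplet_yield:.1%}")
print(f"silicon per good product:  {mono_cost:.0f} vs {chiplet_cost:.0f} mm^2")
```

With these assumed numbers, the model gives roughly 55% yield for the monolithic die and roughly 86% for each chiplet. The saving comes from discarding only the small bad dies rather than an entire large one, which is why the chiplet build consumes noticeably less wafer area per working product in this sketch.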

This modular approach is the key to scaling consumer CPUs and enterprise accelerators alike, allowing hardware to be custom-tailored for specific workloads without redesigning an entire monolithic die from scratch.

ASICs and TPUs: The Enterprise Heavyweights

At the enterprise level, where companies are deploying AI to millions of users, general-purpose GPUs are being supplemented—and in some cases replaced—by Application-Specific Integrated Circuits (ASICs).

An ASIC is a chip designed from the ground up for one specific task. In the realm of AI, the most famous ASIC is Google’s Tensor Processing Unit (TPU), alongside Amazon’s Inferentia chips.

TPUs are specifically optimized for the tensor operations that underpin deep learning networks. Because they strip away the graphical rendering hardware found in GPUs, TPUs can dedicate all their silicon real estate to low-precision arithmetic (like 8-bit integers) and to minimizing data movement. This specialization results in very high performance-per-watt when running AI inference at scale, making them the silent backbone of modern cloud computing and large language models.
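To illustrate what low-precision arithmetic means in practice, here is a minimal sketch of symmetric 8-bit quantization in plain Python. It is a generic textbook scheme, not a description of how any particular TPU or Inferentia chip implements it, and the function names `quantize_int8` and `int8_dot` are hypothetical.

```python
def quantize_int8(values):
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs else 1.0
    return [round(v / scale) for v in values], scale


def int8_dot(weights, activations):
    """Dot product using integer multiply-accumulates, rescaled at the end."""
    qw, w_scale = quantize_int8(weights)
    qa, a_scale = quantize_int8(activations)
    acc = sum(w * a for w, a in zip(qw, qa))  # integer-only accumulation
    return acc * w_scale * a_scale


weights = [0.5, -1.0, 0.25]
activations = [2.0, 1.0, -4.0]
exact = sum(w * a for w, a in zip(weights, activations))
approx = int8_dot(weights, activations)
print(exact, approx)
```

The quantized result lands close to the exact floating-point answer, but not exactly on it. That small loss of precision is the trade accepted in exchange for integer multipliers, which are smaller and cheaper in energy than floating-point units.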

Neuromorphic Computing: Mimicking the Human Brain

Perhaps the most radical departure from traditional CPU/GPU paradigms is neuromorphic computing. While modern AI software loosely mimics the brain’s neural networks, neuromorphic hardware physically mimics the brain’s biological structure.

Traditional computers operate synchronously; a master clock dictates when data moves, meaning the system consumes power continuously, even when idle. Neuromorphic chips, such as Intel’s Loihi 2 or IBM’s TrueNorth and Hermes, use Spiking Neural Networks (SNNs).

- Event-Driven Processing: Just like neurons in the human brain, artificial neurons in a neuromorphic chip only “fire” when their accumulated input crosses a specific threshold. With no stimulus, there are no spikes, and the chip draws very little power.

- Colocated Memory and Compute: In traditional hardware, data constantly moves back and forth between the processor and the RAM (the Von Neumann bottleneck). Neuromorphic designs intertwine the memory and the computing logic, in some cases using specialized components (like Phase-Change Memory devices), much like biological synapses.
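The event-driven behavior described above can be sketched with a leaky integrate-and-fire neuron, a common simplified model of a spiking neuron. This is a software illustration only, not how Loihi 2 or TrueNorth are programmed. The function name `simulate_lif` and the `threshold` and `leak` values are assumptions chosen for the example.

```python
def simulate_lif(inputs, threshold=1.0, leak=0.9):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    Returns a list of 0/1 spikes, one per input.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        potential = potential * leak + current  # leak, then integrate
        if potential >= threshold:
            spikes.append(1)   # fire an event
            potential = 0.0    # reset after the spike
        else:
            spikes.append(0)   # no event, nothing to send downstream
    return spikes


quiet = simulate_lif([0.0] * 5)
busy = simulate_lif([0.6, 0.6, 0.0, 0.0, 1.2])
print(quiet)  # [0, 0, 0, 0, 0]
print(busy)   # [0, 1, 0, 0, 1]
```

With no input, the neuron never fires, so nothing downstream has any work to do. In event-driven hardware, that silence is where the power savings come from.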

This architecture offers stunning advantages. Neuromorphic systems can process sensor data, run pattern recognition, and execute real-time continuous learning while consuming a tiny fraction of the power of a standard GPU. This makes them the holy grail for robotics, autonomous vehicles, and edge computing, where real-time decisions must be made without draining a battery or waiting for a cloud server to respond.

The Hidden Layer: Silicon Photonics

As compute density increases, moving electrical signals through copper wires creates immense heat and latency. The next frontier in hardware engineering is silicon photonics—using light instead of electricity to transmit data within and between chips.

By integrating microscopic lasers and optical components directly onto the silicon, engineers can move data as light, with far less heat generation and much higher bandwidth than copper allows. When combined with advanced microfluidic liquid cooling, silicon photonics is allowing engineers to build tightly packed, exascale AI data centers that would be impractical to power and cool if built with traditional copper interconnects.

Conclusion

The era of relying solely on faster CPUs and bigger GPUs is over. The next decade of computing will be defined by highly specialized, heterogeneous hardware. From NPUs accelerating everyday tasks on your personal rig to custom chiplets, enterprise ASICs, and brain-inspired neuromorphic processors, US hardware engineering is fundamentally rewriting the rules of silicon. These innovations are not just making computers faster; they are making them radically more efficient, paving the way for the intelligent edge, sustainable data centers, and the true integration of AI into every facet of digital life.